Look up the word “odor” in a dictionary, and you’ll find one definition to be, “a distinctive smell, especially an unpleasant one.” But in our world of digital olfaction, odor is much more complex.

Scientifically speaking, an odor consists of one or more volatile chemical compounds that an object releases into the air at a rate that depends on temperature. As temperature rises, these compounds evaporate more readily, as the illustration below shows.
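To put a number on the temperature effect, the Clausius-Clapeyron relation is a standard approximation for how a compound's vapor pressure, and therefore how readily it evaporates, rises with temperature. This sketch is an illustration added here, not part of Aryballe's method. It assumes a constant enthalpy of vaporization, and the 44 kJ/mol figure is an approximate value for water near room temperature.

```python
import math

R = 8.314  # universal gas constant, J/(mol*K)


def vapor_pressure_ratio(delta_h_vap: float, t1_kelvin: float, t2_kelvin: float) -> float:
    """Clausius-Clapeyron estimate of P(T2) / P(T1).

    Assumes the enthalpy of vaporization (J/mol) stays constant between T1 and T2.
    """
    return math.exp(-delta_h_vap / R * (1.0 / t2_kelvin - 1.0 / t1_kelvin))


# Illustrative value: roughly 44 kJ/mol, close to water's enthalpy of vaporization at room temperature.
ratio = vapor_pressure_ratio(44_000, 293.15, 303.15)  # 20 °C -> 30 °C
print(f"Warming from 20 °C to 30 °C multiplies vapor pressure by about {ratio:.2f}")
```

A ratio above 1 means more molecules leave the object at the higher temperature, which is the effect described above.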

The release of these molecules is the object broadcasting its scent information out into the world.

Now, the effect of this scent on a human being is where odor becomes even more complicated. Scent stimulates the neurons of the olfactory bulb and, combined with information from other regions of the brain, is perceived differently by every individual depending on their past experiences and personal feelings. That’s why, for example, the scent of Gain laundry detergent might remind you of your favorite childhood sweater every time you smell it.

This is why, for years, the digitization of the human sense of smell has been heralded as impossible.

Not at Aryballe. We have succeeded in mimicking the human sense of smell: odor molecules bind to our biosensors, producing a unique odor signature. We then improve the capture and analysis of odor data by applying machine learning at two different levels:

- To improve the signal-to-noise ratio, by reducing the noise and/or increasing the signal

- For pattern recognition, so that the same odor is recognized consistently, measurement after measurement
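As a point of reference for the first item, signal-to-noise ratio is commonly expressed in decibels as 10·log10(signal power / noise power). The sketch below uses simple averaging of repeated noisy traces as a stand-in noise-reduction step; it is not the machine-learning approach the post refers to, and the synthetic sine-wave signal and noise level are hypothetical.

```python
import math
import random
import statistics


def snr_db(clean: list[float], noisy: list[float]) -> float:
    """SNR in decibels: 10 * log10(signal power / noise power).

    Noise is taken as the difference between a noisy trace and a known clean reference.
    """
    signal_power = statistics.fmean(x * x for x in clean)
    noise_power = statistics.fmean((n - c) ** 2 for n, c in zip(noisy, clean))
    return 10 * math.log10(signal_power / noise_power)


random.seed(0)
# Hypothetical clean signal: a sine wave sampled 500 times.
clean = [math.sin(2 * math.pi * i / 50) for i in range(500)]


def noisy_measurement() -> list[float]:
    """One simulated measurement: the clean signal plus Gaussian noise (sigma = 0.5)."""
    return [c + random.gauss(0.0, 0.5) for c in clean]


single = noisy_measurement()
# Averaging 16 independent measurements point by point lowers the noise power about 16-fold.
averaged = [statistics.fmean(vals) for vals in zip(*(noisy_measurement() for _ in range(16)))]

print(f"single trace:      {snr_db(clean, single):.1f} dB")
print(f"mean of 16 traces: {snr_db(clean, averaged):.1f} dB")
```

Averaging only helps when the noise is independent between measurements; noise that tracks the signal, such as the environmental interference discussed later, needs a different correction.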

However, two steps have to happen before machine learning comes into play:

Normalization of the data

First, a sample of ambient air is collected. Just as computer vision may need to bring a face into the same frame, contrast, and orientation before it can be compared, in olfaction we first have to transform the odor measurement.

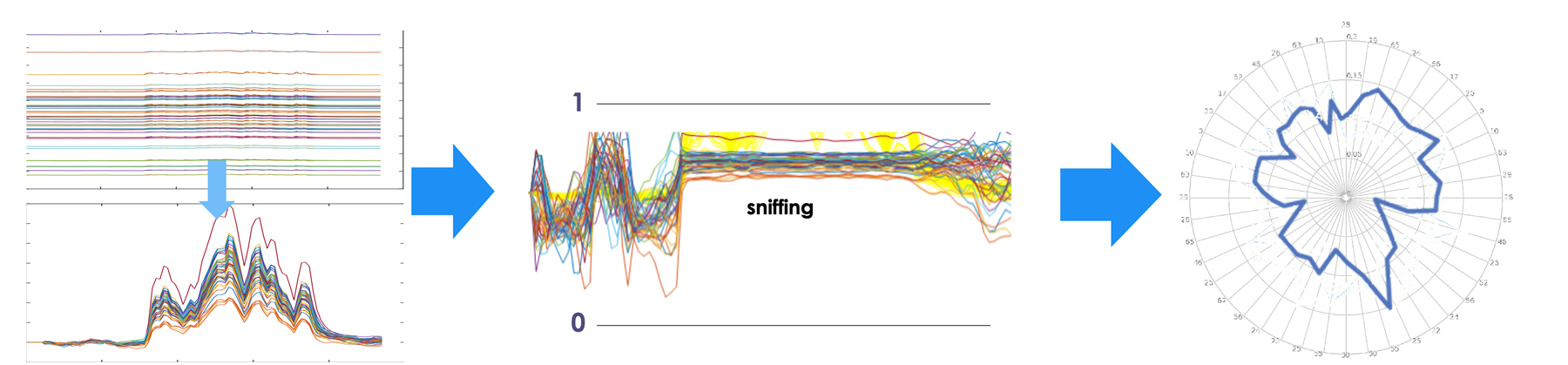

We do this by subtracting the responses of the biosensors’ peptides to odors already present in the ambient air, so that the odor signature we collect represents the odor itself, not the odor plus any other odor molecules that might be present in the surrounding air. The chart below illustrates how we do this as an odor interacts with our biosensors.
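A minimal sketch of ambient-air subtraction, assuming each reading is a list with one response value per biosensor and that the baseline is the mean of several ambient-air readings. The data layout, function names, and values are hypothetical, not Aryballe's software.

```python
import statistics

Frame = list[float]  # hypothetical layout: one response value per biosensor


def ambient_baseline(ambient_frames: list[Frame]) -> Frame:
    """Average each biosensor's response over the ambient-air reference readings."""
    return [statistics.fmean(column) for column in zip(*ambient_frames)]


def subtract_ambient(sample_frames: list[Frame], ambient_frames: list[Frame]) -> list[Frame]:
    """Remove each biosensor's ambient-air response from every sample reading."""
    baseline = ambient_baseline(ambient_frames)
    return [[value - base for value, base in zip(frame, baseline)] for frame in sample_frames]


# Three biosensors; two ambient-air readings, then two readings with the odor present.
ambient = [[0.10, 0.20, 0.05], [0.12, 0.18, 0.05]]
sample = [[0.90, 0.40, 0.30], [1.00, 0.45, 0.35]]

result = subtract_ambient(sample, ambient)
print(result)
```

Averaging several ambient readings, rather than using a single one, makes the baseline less sensitive to momentary fluctuations.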

The third curve, scaled between 0 and 1, is the normalized response of all the biosensors in the device; the plateau shared by all the curves corresponds to constant normalized values. This plateau, which represents the biosensors’ interaction with the odor in question, is analyzed in real time to extract the most stable period of the biosensors’ response, i.e., the equilibrium between the odor compounds and the biosensors, which characterizes the odor’s identity as perceived by the biosensors.
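One way to sketch the normalization and plateau search, under stated assumptions: all traces are scaled by a single common factor so the largest response becomes 1, which preserves the relative amplitudes between biosensors, and the "most stable period" is taken to be the fixed-width window with the lowest summed variance across biosensors. The synthetic rise-and-decay traces, window width, and function names are hypothetical.

```python
import math
import statistics


def normalize_jointly(traces: list[list[float]]) -> list[list[float]]:
    """Scale all biosensor traces by one common factor so the largest response is 1.

    A shared factor (rather than per-sensor scaling) keeps the relative amplitudes intact.
    """
    peak = max(abs(v) for trace in traces for v in trace)
    if peak == 0:
        return [list(trace) for trace in traces]
    return [[v / peak for v in trace] for trace in traces]


def most_stable_window(traces: list[list[float]], width: int) -> int:
    """Return the start index of the window whose summed variance across sensors is lowest."""
    n = len(traces[0])
    best_start, best_score = 0, float("inf")
    for start in range(n - width + 1):
        score = sum(statistics.pvariance(t[start:start + width]) for t in traces)
        if score < best_score:
            best_start, best_score = start, score
    return best_start


def synthetic_trace(amplitude: float) -> list[float]:
    """Hypothetical response: rises while the odor is present, decays after it is removed."""
    rise = [amplitude * (1 - math.exp(-t / 4)) for t in range(40)]
    fall = [rise[-1] * math.exp(-t / 4) for t in range(1, 21)]
    return rise + fall


traces = normalize_jointly([synthetic_trace(a) for a in (1.0, 2.0, 0.5)])
start = most_stable_window(traces, width=8)
levels = [statistics.fmean(t[start:start + 8]) for t in traces]  # plateau level per biosensor
print(start, [round(v, 3) for v in levels])
```

A real device would likely add a stability threshold rather than always accepting the least-variable window; the exhaustive scan here is kept for clarity.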

Although the total measurement may take a few seconds, analyzing this most stable period lets us capture the unique odor signature, represented as a radar chart. This signature, along with the intensity of the signal, is then used for machine learning.
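The post does not specify how the signature and intensity are separated. A common choice, assumed here, is to treat the per-biosensor plateau levels as a vector: its Euclidean norm serves as the intensity, and the unit-length vector is the pattern a radar chart would display. Under that assumption, a weaker and a stronger sample of the same odor share one signature and differ only in intensity. The function name and values are hypothetical.

```python
import math


def signature_and_intensity(plateau_levels: list[float]) -> tuple[list[float], float]:
    """Split per-biosensor plateau levels into a unit-length pattern and a scalar intensity.

    The pattern is unchanged if every biosensor's response scales by the same factor;
    that common factor shows up in the intensity instead.
    """
    intensity = math.sqrt(sum(v * v for v in plateau_levels))
    if intensity == 0:
        return [0.0] * len(plateau_levels), 0.0
    return [v / intensity for v in plateau_levels], intensity


weak = [0.2, 0.4, 0.1]
strong = [0.6, 1.2, 0.3]  # same hypothetical odor profile, three times the response
sig_weak, i_weak = signature_and_intensity(weak)
sig_strong, i_strong = signature_and_intensity(strong)
print([round(x, 3) for x in sig_weak], round(i_weak, 3))
print([round(x, 3) for x in sig_strong], round(i_strong, 3))
```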

Environmental signal compensation

When measuring a phenomenon, it is common for another, undesirable phenomenon to have frequency-domain properties close to those of the useful signal. This similarity can make it difficult to distinguish what we are trying to measure from the noise.

Take photography, for instance, and image stabilization. In a still image, small vibrations of the camera or the object can create a blur – an undesirable input. The photographer can correct this with image processing.

In digital olfaction, the undesirable signal is often water (H₂O) molecules. They are everywhere, at varying concentrations, and they can compromise the signature of the odor we want to measure. The correction, analogous to image processing in photography, therefore relies on an integrated humidity sensor.
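A minimal sketch of humidity compensation for a single biosensor, assuming its response to water vapor is linear and simply adds to the odor response. A line is fitted by least squares on calibration readings taken in odor-free air at known humidity levels, and the predicted humidity contribution is then subtracted from a sample reading. The linear model, calibration values, and function names are hypothetical; the post does not describe Aryballe's actual correction.

```python
import statistics


def fit_humidity_model(humidity: list[float], responses: list[float]) -> tuple[float, float]:
    """Least-squares line: response = slope * humidity + intercept.

    Calibrated in odor-free air, so the whole response is attributed to water vapor.
    """
    mean_h = statistics.fmean(humidity)
    mean_r = statistics.fmean(responses)
    sxx = sum((h - mean_h) ** 2 for h in humidity)
    sxy = sum((h - mean_h) * (r - mean_r) for h, r in zip(humidity, responses))
    slope = sxy / sxx
    return slope, mean_r - slope * mean_h


def compensate_humidity(response: float, humidity: float, model: tuple[float, float]) -> float:
    """Subtract the humidity-driven part predicted by the model from a sample reading."""
    slope, intercept = model
    return response - (slope * humidity + intercept)


cal_humidity = [20.0, 40.0, 60.0, 80.0]  # % relative humidity, read from the humidity sensor
cal_response = [0.05, 0.09, 0.13, 0.17]  # hypothetical biosensor readings in odor-free air
slope, intercept = fit_humidity_model(cal_humidity, cal_response)

# A sample measured at 70 % RH: part of its 0.65 response is due to water vapor alone.
corrected = compensate_humidity(0.65, 70.0, (slope, intercept))
print(round(corrected, 3))
```

In a multi-sensor device, the same fit would be repeated for each biosensor, since each one may react to humidity differently.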

Humidity is just one of the environmental factors we have to compensate for before any machine learning is applied, and each of these corrections is vital for making reliable decisions with odor data.

In our next blog post, we’ll discuss exactly how machine learning works within digital olfaction. In the meantime, if you have any questions, please contact us via our contact page.